Deep learning is an innovative technology that has revolutionized the field of artificial intelligence. However, one question that arises is whether deep learning can learn from its mistakes. In this article, we will explore the concept of deep learning and delve into whether it has the ability to learn from errors and improve its performance.

What is deep learning?

Deep learning is a subset of machine learning that uses artificial neural networks with many layers to analyze and process large amounts of data. These networks are loosely inspired by the way neurons in the human brain connect, and they improve over time by learning from examples rather than by following hand-written rules.

As an AI expert at Prometheuz, I often find myself explaining the differences between machine learning and deep learning to clients who are new to the field. While both involve training algorithms on large datasets, deep learning takes things a step further by using multiple layers of interconnected nodes in its neural networks. This allows for more complex decision-making and pattern recognition capabilities.

Some common applications of deep learning include image and speech recognition, natural language processing, and autonomous driving systems. As the amount of data generated by these technologies continues to grow, so too does the need for advanced machine learning techniques like deep learning.

How does deep learning work?

At its core, deep learning involves training a neural network on a large dataset in order to identify patterns or make predictions about new data. The network consists of multiple layers of interconnected nodes (or “neurons”), each with its own set of weights that determine how strongly it responds to input from other neurons.

During training, the network adjusts these weights based on how well it performs on a given task (such as identifying objects in an image). This process is repeated many times until the network’s performance reaches a satisfactory level.
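The adjust-and-repeat loop can be sketched in a few lines of Python. This is a minimal illustration that assumes the simplest possible "network": a single sigmoid neuron trained with gradient descent on the cross-entropy loss, using a toy logical-OR dataset. The names `sigmoid`, `train_neuron` and `OR_DATA`, along with the learning rate and epoch count, are hypothetical choices for this example and do not come from any library.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, lr=0.5, epochs=2000):
    """Fit the weights w and bias b of one sigmoid neuron by full-batch
    gradient descent on the binary cross-entropy loss."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), y in data:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)  # forward pass: predicted probability
            err = p - y  # gradient of cross-entropy w.r.t. the pre-activation
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        n = len(data)
        # Move each weight a small step against its average gradient.
        w = [w[i] - lr * grad_w[i] / n for i in range(2)]
        b -= lr * grad_b / n
    return w, b

# Logical OR: a tiny, linearly separable toy task.
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_neuron(OR_DATA)
predictions = [round(sigmoid(w[0] * x1 + w[1] * x2 + b)) for (x1, x2), _ in OR_DATA]
print(predictions)  # expected: [0, 1, 1, 1]
```

Real frameworks compute these gradients automatically for millions of weights. The cycle stays the same, though: predict, measure the error, adjust.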

One key advantage of deep learning is its ability to automatically extract relevant features from raw data without requiring manual feature engineering by humans. This makes it well-suited for tasks like image recognition where traditional computer vision techniques can be difficult or impractical.

Can deep learning learn from mistakes?

Yes. In fact, one of the main strengths of deep learning is its ability to learn from mistakes during training. After each prediction, a loss function measures the difference between the network’s predicted output and the actual output. An algorithm called backpropagation then calculates how much each weight contributed to that error, and an optimizer such as gradient descent adjusts every weight in the direction that reduces it.

If the network makes a mistake during this process (such as misidentifying an object in an image), the loss is large. The error signal is then propagated backwards through the network, layer by layer, to adjust the weights of the neurons that contributed to the mistake. Repeating this over many examples allows the network to learn from its mistakes and make more accurate predictions on new inputs.
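The backward pass can be illustrated with a hypothetical two-weight network: one tanh hidden unit feeding one linear output, trained with a squared-error loss. The sketch below applies the chain rule by hand to get each weight's share of the error. It then compares the result with a finite-difference estimate, a common sanity check for gradient code. All names and numbers are made up for illustration.

```python
import math

def forward(w1, w2, x):
    h = math.tanh(w1 * x)  # hidden unit
    y_hat = w2 * h         # output unit
    return h, y_hat

def loss(w1, w2, x, y):
    _, y_hat = forward(w1, w2, x)
    return 0.5 * (y_hat - y) ** 2

def backprop(w1, w2, x, y):
    """Chain rule, applied from the output back to the first layer."""
    h, y_hat = forward(w1, w2, x)
    d_yhat = y_hat - y             # error at the output
    d_w2 = d_yhat * h              # output weight's share of the error
    d_h = d_yhat * w2              # error passed back to the hidden unit
    d_w1 = d_h * (1 - h ** 2) * x  # through tanh'(z) = 1 - tanh(z)^2
    return d_w1, d_w2

def numerical_grad(w1, w2, x, y, eps=1e-6):
    """Central-difference estimate of the same two gradients."""
    g1 = (loss(w1 + eps, w2, x, y) - loss(w1 - eps, w2, x, y)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, y) - loss(w1, w2 - eps, x, y)) / (2 * eps)
    return g1, g2

w1, w2, x, y = 0.7, -1.3, 0.5, 1.0
analytic = backprop(w1, w2, x, y)
numeric = numerical_grad(w1, w2, x, y)
print(analytic, numeric)  # the two pairs should agree closely

# One gradient-descent step using the backpropagated gradients lowers the loss.
lr = 0.1
new_w1 = w1 - lr * analytic[0]
new_w2 = w2 - lr * analytic[1]
print(loss(w1, w2, x, y), "->", loss(new_w1, new_w2, x, y))
```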

Why is it important for machines to learn from mistakes?

Learning from mistakes is an essential part of any intelligent system, whether human or machine. By analyzing past failures and adjusting their behavior accordingly, machines can improve their performance over time and become more reliable and effective at their tasks.

In some cases, such as medical diagnosis or autonomous driving systems, learning from mistakes can be a matter of life or death. By continually improving their accuracy and reducing errors, these systems can help prevent accidents and save lives.

Additionally, learning from mistakes can help machines adapt to changing environments or unexpected situations. By recognizing patterns in past failures and adjusting their behavior accordingly, machines can become more flexible and better able to handle new challenges.

What are the benefits of deep learning?

There are many potential benefits to using deep learning in AI applications. Some of these include:

– Improved accuracy: Deep learning algorithms have been shown to outperform traditional machine learning techniques on many tasks, including image recognition and natural language processing.

– Faster processing: Deep learning workloads consist largely of matrix operations that parallelize well. As a result, they can run on specialized hardware like GPUs and process large amounts of data much faster than they could on conventional CPUs.

– Automated feature extraction: Deep learning algorithms are able to automatically extract relevant features from raw data without requiring manual feature engineering by humans.

– Adaptability: Deep learning models can be retrained or fine-tuned on new data so that they adapt their behavior accordingly. This makes them well-suited for tasks where the input data may be constantly changing (such as speech recognition).

How do we measure the performance of a deep learning algorithm?

There are several common metrics used to evaluate the performance of a deep learning algorithm. These include:

– Accuracy: The fraction of inputs for which the algorithm predicts the correct output.

– Precision and recall: These measures are used in binary classification tasks. Precision is the fraction of items predicted as positive that really are positive: true positives divided by (true positives + false positives). Recall is the fraction of actual positives that the algorithm finds: true positives divided by (true positives + false negatives).

– F1 score: The harmonic mean of precision and recall, 2 × precision × recall / (precision + recall). Because it combines both measures, it takes into account both false positives and false negatives.

– Mean squared error: The average of the squared differences between the algorithm’s predicted values and the actual values in regression tasks.
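The definitions above translate directly into code. Here is a plain-Python sketch that assumes binary labels encoded as 1 (positive) and 0 (negative); the function names are hypothetical. Returning 0.0 when a denominator is zero is one common convention among several. In practice, tested implementations such as scikit-learn's `sklearn.metrics.precision_score`, `recall_score`, `f1_score` and `mean_squared_error` are preferable.

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, false positives, false negatives and true negatives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many were right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def mean_squared_error(y_true, y_pred):
    """Average of squared differences between actual and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

m = classification_metrics([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 0, 0, 1, 0, 0, 0])
print(m)  # accuracy 0.625, precision ≈ 0.667, recall 0.5, f1 ≈ 0.571
print(mean_squared_error([3.0, -0.5, 2.0], [2.5, 0.0, 2.0]))  # ≈ 0.1667
```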

In addition to these metrics, it’s important to consider factors like computational efficiency and scalability when evaluating a deep learning algorithm.

What happens when a deep learning algorithm makes a mistake?

During training, when a deep learning algorithm makes a mistake, it adjusts its weights through backpropagation and gradient descent to reduce the error on future inputs. The specific adjustments depend on the size of the error, the loss function being minimized, and the architecture of the network. Once a model is deployed, however, its weights usually stay fixed unless it is retrained or fine-tuned on new data.

For example, suppose an image recognition system misidentifies a dog as a cat. Training then nudges its weights so that the features it has learned (which may correspond to cues such as fur texture or ear shape) better separate the two classes. Over time, this should lead to fewer errors on similar images.

It’s worth noting that not all mistakes made by deep learning algorithms can be corrected through backpropagation alone. In some cases, additional training data or changes to the network architecture may be necessary to address persistent errors.

Can we correct mistakes made by a deep learning algorithm?

Yes – in fact, correcting mistakes is an essential part of training any machine learning algorithm. By analyzing past failures and adjusting their behavior accordingly, deep learning algorithms can improve their accuracy and reduce errors over time.

There are several techniques that can be used to correct mistakes made by a deep learning algorithm. These include:

– Adjusting the network architecture: Changing the number or arrangement of neurons in a network can sometimes help address persistent errors.

– Adding more training data: Providing the algorithm with more examples of similar tasks can help it better generalize to new situations and reduce errors.

– Tuning hyperparameters: Adjusting settings like the learning rate or regularization strength, which are chosen before training rather than learned, can sometimes help improve performance on specific tasks.

It’s worth noting, however, that these techniques do not guarantee that every error can be fixed. Some errors persist because of noisy labels or genuinely ambiguous inputs, and satisfactory performance often requires combining several of these changes.
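The learning rate mentioned above shows why tuning matters. Consider gradient descent on a one-parameter toy loss, (w − 3)², whose minimum is at w = 3 and whose update rule is w ← w − lr · 2(w − 3). This is only a sketch: the loss, the starting point and the three learning rates are assumptions chosen to make the behavior visible. It is not a tuning procedure for a real network.

```python
def gradient_descent(lr, steps=50, w=0.0):
    """Minimize the toy loss (w - 3)^2, whose gradient is 2 * (w - 3)."""
    for _ in range(steps):
        w -= lr * 2 * (w - 3)
    return w

for lr in (0.01, 0.1, 1.1):
    print(f"lr={lr}: w after 50 steps = {gradient_descent(lr):.4f}")
```

With lr = 0.01 the weight is still far from 3 after 50 steps, so learning is too slow. With lr = 0.1 it converges almost exactly. With lr = 1.1 every step overshoots by more than the previous error, so the weight diverges. Real networks show the same three regimes, which is why learning rates are usually searched over rather than guessed.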

What techniques can be used to improve the accuracy of a deep learning model?

There are many techniques that can be used to improve the accuracy of a deep learning model. Some of these include:

– Increasing the size of the training dataset: Providing the algorithm with more examples of similar tasks can help it better generalize to new situations and reduce errors.

– Tuning hyperparameters: Parameters like learning rate, batch size, and regularization strength can have a big impact on model performance, so it’s important to experiment with different settings to find optimal values.

– Using transfer learning: This involves using pre-trained models as a starting point for new tasks, allowing them to leverage knowledge learned from previous datasets.

– Regularization: Techniques like dropout or L1/L2 regularization can help prevent overfitting and improve generalization performance.

– Model ensembling: Combining multiple models (either trained on different subsets of data or using different architectures) can often lead to improved performance through diversity.

It’s worth noting that improving model accuracy is often an iterative process that involves experimentation and fine-tuning over time.
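Dropout, listed above as a regularization technique, is simple enough to sketch directly. The version below is "inverted" dropout applied to a plain Python list of activations; that choice of variant and the function name are assumptions of this example. Frameworks provide their own layers for this, such as `torch.nn.Dropout` in PyTorch.

```python
import random

def dropout(activations, p=0.5, training=True, rng=None):
    """Inverted dropout over a list of activations.

    During training each activation is zeroed with probability p and the
    survivors are scaled by 1 / (1 - p), so each activation's expected value
    is unchanged. At inference time the input passes through untouched.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

out = dropout([1.0] * 10_000, p=0.5, rng=random.Random(0))
print(sum(out) / len(out))                  # close to 1.0 on average
print(dropout([1.0, 2.0], training=False))  # [1.0, 2.0]
```

Because a different random subset of units is silenced on every training step, no single unit can become indispensable. This discourages the network from memorizing individual examples.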

Are there any limitations to what a deep learning algorithm can learn from its mistakes?

While deep learning algorithms are capable of learning from mistakes and improving their performance over time, there are some limitations to what they can accomplish. Some of these include:

– Limited data availability: Deep learning algorithms typically require large amounts of labeled training data in order to perform well. If this data is not available or is biased in some way, the algorithm may struggle to generalize to new situations.

– Overfitting: If a model becomes too complex or is trained for too long on a limited dataset, it may start to memorize specific examples rather than generalizing to new situations. This can lead to poor performance on previously unseen data.

– Complexity: Deep learning models can be extremely complex and difficult to interpret, making it challenging for humans to understand how they are making decisions or identify sources of error.

– Lack of common sense: While deep learning algorithms excel at recognizing patterns in data, they often lack the common sense reasoning abilities that humans possess. This can make it difficult for them to handle tasks that require contextual understanding or abstract reasoning.
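A common way to catch the overfitting described above is to track the loss on held-out validation data alongside the training loss. When training loss keeps falling but validation loss starts rising, the model has begun to memorize. The sketch below implements a simple form of early stopping with a "patience" window. The function name, the patience value and both loss curves are invented for illustration.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch with the lowest validation loss seen before the loss
    failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop here
    return best_epoch

# Made-up curves: training loss keeps falling while validation loss
# turns upward after epoch 3, the classic signature of overfitting.
train_losses = [0.90, 0.60, 0.40, 0.30, 0.20, 0.15, 0.10, 0.07]
val_losses   = [0.95, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
e = early_stopping_epoch(val_losses)
print(e, train_losses[e], val_losses[e])  # 3 0.3 0.5
```

In a real training loop, the weights saved at the best epoch would be restored, rather than keeping those from the final epoch.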

How do we ensure that the data used to train a deep learning model is accurate and unbiased?

Ensuring that training data is accurate and unbiased is essential for building effective deep learning models. Some techniques that can be used include:

– Data cleaning: Before training a model, it’s important to thoroughly clean and preprocess the input data in order to remove errors or inconsistencies.

– Data augmentation: This involves generating additional training examples through transformations like rotation, scaling, or cropping. It increases the variety of examples within each class and can improve robustness, although it cannot add diversity that is missing from the original data.

– Balancing classes: If one class has significantly more examples than another, this can lead to bias in the model’s predictions. Balancing the number of examples per class can help prevent this issue.

– Using diverse datasets: It’s important to use datasets that are representative of the real-world situations the model will be used in. This can help prevent bias and ensure that the model is able to generalize to new situations.

– Regularly re-evaluating data: As models are trained on new data, it’s important to regularly evaluate their performance and check for sources of bias or error.
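Balancing classes can be as simple as random oversampling. This approach duplicates randomly chosen examples from the smaller classes until every class matches the largest one. The sketch below assumes examples and labels held in two parallel Python lists; the function name and the toy data are hypothetical. Undersampling the majority class or weighting the loss per class are common alternatives.

```python
import random
from collections import Counter

def oversample(examples, labels, seed=0):
    """Randomly duplicate examples from smaller classes until every class
    has as many examples as the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(examples, labels):
        by_class.setdefault(y, []).append(x)
    target = max(len(xs) for xs in by_class.values())
    out_x, out_y = [], []
    for y, xs in by_class.items():
        extra = [rng.choice(xs) for _ in range(target - len(xs))]
        for x in xs + extra:
            out_x.append(x)
            out_y.append(y)
    return out_x, out_y

X = ["a1", "a2", "a3", "a4", "a5", "b1"]
Y = ["cat", "cat", "cat", "cat", "cat", "dog"]
bx, by = oversample(X, Y)
print(Counter(by))  # Counter({'cat': 5, 'dog': 5})
```

Duplicated examples can make overfitting on the minority class more likely. For the same reason, oversampling is normally applied only to the training split, never to the data used for evaluation.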

Can humans learn from their mistakes in the same way that machines can through deep learning algorithms?

Yes, although not in exactly the same way. Learning from mistakes is an essential part of human intelligence as well as machine intelligence. By analyzing past failures and adjusting our behavior accordingly, we can improve our skills and become more effective at our tasks over time.

One key difference between human learning and machine learning is that humans are often able to incorporate additional information beyond just the raw data. For example, we may use prior knowledge or intuition to make decisions, while machines rely largely on what they have learned from training data.

Additionally, humans are often better able to handle tasks that require abstract reasoning or contextual understanding – areas where current deep learning algorithms still struggle. However, as AI technology continues to advance, it’s possible that machines may eventually catch up in these areas as well.

Have there been any notable examples where a deep learning algorithm has learned from its mistakes and improved its performance over time?

There have been many examples of deep learning algorithms improving their performance over time through experience. One notable example is AlphaGo, a program developed by Google DeepMind to play the board game Go.

AlphaGo first drew attention in October 2015, when it defeated the European Go champion Fan Hui. Just a few months later, in March 2016, it beat Lee Sedol, one of the strongest players in the world, by four games to one. Its training combined two stages. First came supervised learning from records of human expert games. Then came reinforcement learning, in which it played large numbers of games against itself and was rewarded for wins, so that moves leading to losses became less likely over time.

In 2017, AlphaGo played a three-game match against the world’s top-ranked player, Ke Jie, and won all three games. This showed how much a system combining deep learning with reinforcement learning can improve through experience, including by learning from its own losing games.

How do we ensure that our use of AI and machine learning technologies is ethical and responsible, especially as they become more advanced and autonomous in decision-making processes?

As AI and machine learning technologies become more advanced and autonomous in decision-making processes, it’s important to ensure that they are used ethically and responsibly. Some steps that can be taken include:

– Developing ethical guidelines: Organizations should establish clear ethical guidelines for the development and use of AI technologies, taking into account factors like privacy, transparency, fairness, and accountability.

– Regular audits: Regular audits should be conducted on AI systems to identify potential sources of bias or error. These audits should be transparent and accessible to stakeholders.

– Human oversight: While some tasks may be fully automated by AI systems, it’s important to have human oversight in place for critical decisions or situations where the AI system may not have enough information.

– Training data selection: Careful attention should be paid to the selection of training data in order to prevent bias or discrimination. Data should be representative of diverse populations whenever possible.

– Continuous monitoring: As AI systems are deployed in real-world situations, it’s important to continuously monitor their performance and address any issues that arise.

What future developments can we expect in the field of deep learning, particularly in terms of how machines learn from their mistakes?

As deep learning continues to advance, we can expect several key developments in how machines learn from their mistakes. Some possibilities include:

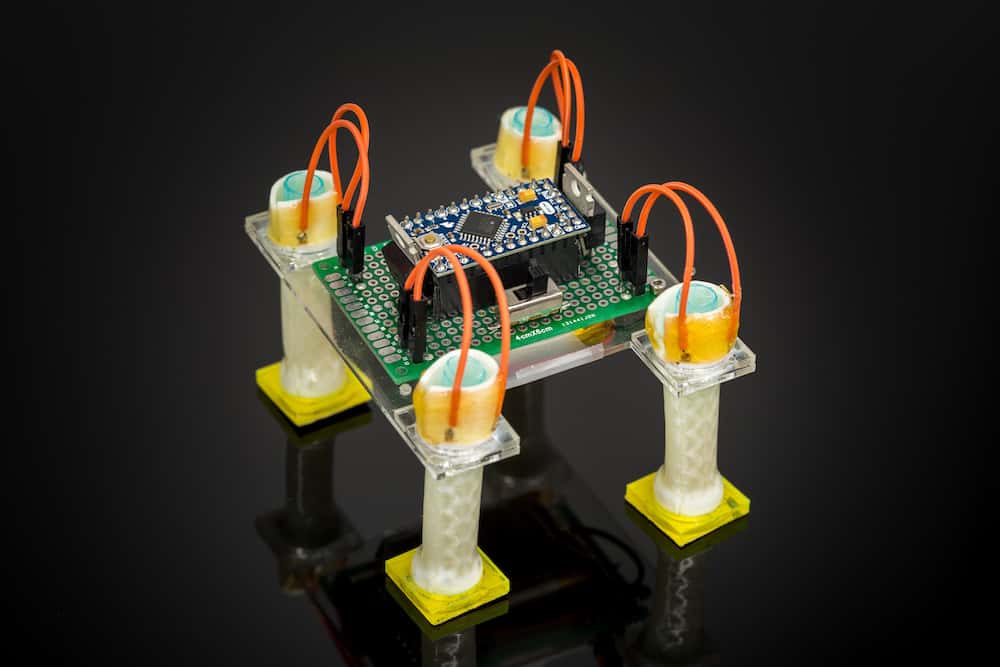

– Reinforcement learning: This involves training algorithms through a process of trial-and-error feedback rather than just supervised or unsupervised learning. Reinforcement learning has already been used successfully in applications like game playing and robotics.

– Transfer learning: This involves using pre-trained models as a starting point for new tasks, allowing them to leverage knowledge learned from previous datasets. Transfer learning has already been used successfully in applications like image recognition and natural language processing.

– Explainable AI: As deep learning models become more complex and difficult to interpret, there is a growing need for techniques that can help humans understand how they are making decisions. Explainable AI is an emerging field that aims to address this issue.

– Active learning: This involves having the algorithm choose which unlabeled examples should be labeled next (for instance, the ones it is least certain about), rather than passively receiving a fixed labeled dataset. Active learning has the potential to reduce the amount of labeled training data needed while still achieving high performance.
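One simple selection rule for active learning is uncertainty sampling. For a binary classifier, this means asking for labels on the examples whose predicted probability is closest to 0.5. The sketch below assumes the model's probabilities for an unlabeled pool have already been computed; the function name and the probability values are hypothetical.

```python
def least_confident(pool_probs, k):
    """Uncertainty sampling: return the indices of the k unlabeled examples
    whose predicted positive-class probability is closest to 0.5."""
    ranked = sorted(range(len(pool_probs)), key=lambda i: abs(pool_probs[i] - 0.5))
    return ranked[:k]

# Hypothetical model outputs for six unlabeled examples.
probs = [0.97, 0.52, 0.10, 0.45, 0.88, 0.61]
print(least_confident(probs, 2))  # [1, 3]: the two examples the model is least sure about
```

After a human labels the selected examples, the model is retrained and the selection is repeated. Labeling effort therefore goes where the model's mistakes are most likely.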

Overall, the future of deep learning looks bright as researchers continue to explore new techniques and applications for these powerful algorithms.

In conclusion, deep learning does indeed learn from mistakes. During training, backpropagation and gradient-based optimization let the system measure its errors and adjust its weights to reduce them, so its performance improves over time. If you’re interested in exploring the many benefits of AI and deep learning for your business, we invite you to get in touch with us today. Our team of experts can help you harness the power of these cutting-edge technologies to achieve your goals and drive success. So why wait? Contact us now and discover what our AI services can do for you!

Does deep learning learn from its experience?

Yes, in the sense that a network’s weights are updated based on the data it encounters during training. These networks are composed of multiple layers of processing, allowing them to build multiple levels of abstraction to represent the data.

Does machine learning learn from mistakes?

Reinforcement learning is a form of machine learning that allows a machine to learn from its mistakes, much like humans do. This type of machine learning involves the machine solving problems through trial and error.
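Trial-and-error learning can be illustrated with a multi-armed bandit. This is a deliberately simplified reinforcement learning setting with no notion of state. The epsilon-greedy agent below usually picks the option it currently believes is best, but explores a random option 10% of the time. It updates its estimates from the rewards it receives, so choices that keep failing lose out. The payout probabilities, step count and epsilon value are assumptions for illustration.

```python
import random

def epsilon_greedy(true_payout_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which arm pays off most often purely from trial and error."""
    rng = random.Random(seed)
    n_arms = len(true_payout_probs)
    counts = [0] * n_arms
    values = [0.0] * n_arms  # running estimate of each arm's average reward
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                        # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit
        reward = 1.0 if rng.random() < true_payout_probs[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]    # incremental mean
    return values, counts

values, counts = epsilon_greedy([0.2, 0.5, 0.8])
print([round(v, 2) for v in values], counts)
```

The agent is never told which arm is best. It discovers this from the outcomes of its own choices, and most of its pulls end up on the arm with the highest payout.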


Is deep learning a part of machine learning? True or false?

True. Deep learning is a branch of machine learning that powers many of the most advanced forms of artificial intelligence. Although both fall under the umbrella of AI, deep learning is a specialized subset of machine learning.

What is the flaw of deep learning?

A major drawback of deep learning is that models often behave like a black box: the computations between the input and the output are difficult for humans to interpret. This may not matter while a model performs well, but it makes unexpected or problematic behavior hard to explain and failures hard to diagnose.

Is deep learning based on the brain?

Only loosely. Deep learning is a type of machine learning that uses neural networks with several layers (often described as three or more). These networks are inspired by the structure of the human brain, but they are far simpler and do not replicate how it works. By training on vast amounts of data, they are able to “learn” patterns from examples.

What is deep learning mainly based on?

Most contemporary deep learning models are built on multi-layer artificial neural networks, such as convolutional neural networks and transformers. However, deep generative models like deep belief networks and deep Boltzmann machines can also incorporate propositional formulas or latent variables arranged in layers.