Neural networks have been touted as a game-changer in the field of artificial intelligence, but do they really work? This question has been the subject of much debate and research in recent years. In this article, we will explore the effectiveness of neural networks and what makes them so powerful.

What are neural networks?

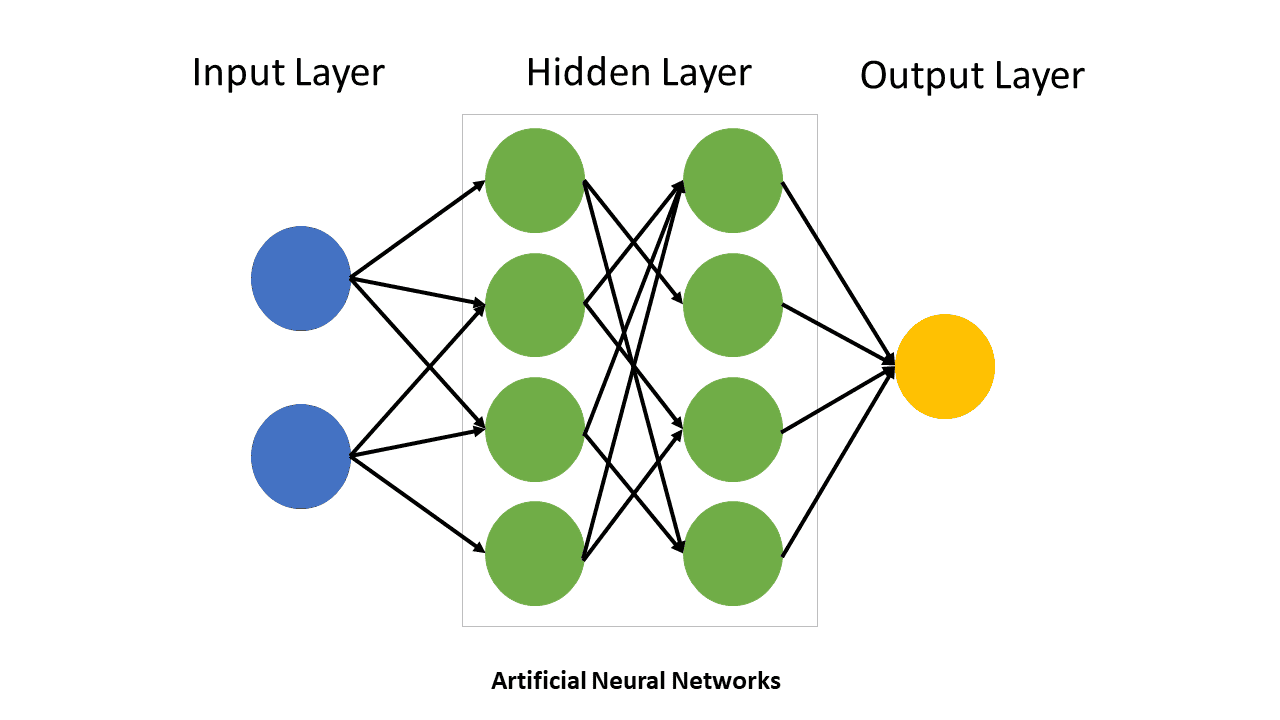

Neural networks are a family of machine learning models loosely inspired by the structure of the human brain. They are composed of interconnected nodes, called neurons, organized into layers. Each neuron receives input from neurons in the previous layer, computes a weighted sum of those inputs plus a bias, applies an activation function, and sends the result to neurons in the next layer.

The architecture of neural networks can vary widely depending on the problem they are designed to solve. Some neural networks have only a few layers while others can have hundreds or even thousands. The most common types of neural networks include feedforward neural networks, recurrent neural networks, and convolutional neural networks.

Neural networks have become increasingly popular in recent years due to their ability to learn complex patterns and relationships in data without being explicitly programmed. This makes them well-suited for tasks like image recognition, natural language processing, and predictive modeling.

The History of Neural Networks

The concept of artificial intelligence dates back to the 1950s, when computer scientists first began exploring ways to create machines that could think like humans. Early neural network models appeared in the 1940s and 1950s, but the approach gained renewed momentum in the 1980s, when the backpropagation algorithm was popularized as a practical way to train networks with multiple layers.

One of the early pioneers in this field was Frank Rosenblatt, who developed the perceptron algorithm in 1957. The perceptron learned simple patterns by adjusting its weights whenever its output was wrong. However, a single-layer perceptron can only separate classes with a straight line (a linear decision boundary), so it cannot learn even simple nonlinear functions such as XOR.
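The perceptron's weight-update rule, and the reason it fails on XOR, can be illustrated with a short Python sketch. It assumes a simplified setup (step activation, zero-initialized weights, a learning rate of 1) and uses hypothetical helper names; it is not meant to reproduce Rosenblatt's original formulation in every detail.

```python
# Minimal perceptron sketch: step activation, weights nudged by the error.
def predict(w, b, x):
    # Output 1 if the weighted sum is positive, else 0.
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """samples: list of ((x1, x2), target) pairs with target in {0, 1}."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(w, b, x)  # feedback: -1, 0, or +1
            w[0] += lr * error * x[0]
            w[1] += lr * error * x[1]
            b += lr * error
    return w, b


def accuracy(w, b, samples):
    return sum(predict(w, b, x) == t for x, t in samples) / len(samples)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b = train_perceptron(AND)
print("AND accuracy:", accuracy(w, b, AND))  # 1.0: AND is linearly separable
w, b = train_perceptron(XOR)
print("XOR accuracy:", accuracy(w, b, XOR))  # below 1.0: no line separates XOR
```

However long it trains, no setting of two weights and a bias classifies all four XOR points correctly, which is exactly the limitation that multilayer networks later overcame.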

In the decades that followed, researchers continued to refine and develop new types of neural network architectures. Today, deep learning has emerged as one of the most powerful forms of machine learning thanks to advancements in computing power and data availability.

How do neural networks work?

Neural networks work by passing input data through a series of interconnected layers of neurons. Each layer transforms the output of the previous one, and this forward pass continues until the output layer produces a final result.
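The forward pass can be sketched in plain Python. This is a minimal illustration assuming a tiny network with two inputs, three hidden ReLU neurons, and one sigmoid output; the weights are hand-picked rather than trained, and `dense_layer` is a hypothetical helper name.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: weights[i] holds neuron i's input weights."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs plus the neuron's bias...
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        # ...passed through the activation function.
        outputs.append(activation(z))
    return outputs


# Hypothetical 2-3-1 network with hand-picked (untrained) weights.
x = [1.0, 2.0]
hidden = dense_layer(x, [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]], [0.0, 0.1, 0.0], relu)
output = dense_layer(hidden, [[1.0, -1.0, 0.5]], [0.0], sigmoid)
print("hidden:", hidden)
print("output:", output)  # a single value between 0 and 1
```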

The weights and biases of each neuron are adjusted during training so that the network can learn to recognize patterns and make accurate predictions. This is typically done using backpropagation, which calculates the error between the predicted output and the actual output and then works backward through the network to compute how much each weight and bias contributed to that error. An optimization algorithm such as gradient descent then uses these gradients to adjust the weights and biases.


Activation Functions

Activation functions are used by neurons in neural networks to introduce nonlinearity into the model. Without them, each layer would compute a linear function of its inputs, and any stack of linear layers collapses into a single linear transformation, so the network could only model linear relationships, much like linear regression. Common activation functions include sigmoid, tanh, ReLU, LeakyReLU, and PReLU.

Sigmoid maps any input value to a value between 0 and 1, which makes it a common choice for the output layer of binary classifiers. Tanh is similar to sigmoid but maps values between -1 and 1 instead. ReLU (Rectified Linear Unit) sets all negative values to zero while leaving positive values unchanged; because it is simple and cheap to compute, it is a common default for hidden layers.
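The activation functions named above can be written in a few lines of Python, along with a quick check that, without activations, two stacked linear layers reduce to a single linear one. The weights in that check are arbitrary values chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))  # squashes to (0, 1)


def tanh(z):
    return math.tanh(z)  # squashes to (-1, 1)


def relu(z):
    return max(0.0, z)  # negatives become 0, positives pass through


def leaky_relu(z, alpha=0.01):
    return z if z > 0 else alpha * z  # small slope keeps negatives nonzero


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f} sigmoid={sigmoid(z):.3f} tanh={tanh(z):+.3f} "
          f"relu={relu(z):.1f} leaky={leaky_relu(z):+.2f}")


# Without activations, two stacked linear layers equal one linear layer:
# w2 * (w1 * x + b1) + b2 == (w2 * w1) * x + (w2 * b1 + b2)
def two_linear_layers(x, w1=3.0, b1=1.0, w2=-2.0, b2=0.5):
    return w2 * (w1 * x + b1) + b2


def one_linear_layer(x, w=-6.0, b=-1.5):  # w = w2 * w1, b = w2 * b1 + b2
    return w * x + b


print(all(math.isclose(two_linear_layers(x), one_linear_layer(x))
          for x in (-1.0, 0.0, 0.7, 5.0)))
```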

What is the purpose of neural networks?

The purpose of neural networks is to learn patterns and relationships in data without being explicitly programmed. Loosely inspired by the human brain, they process input data through interconnected layers of neurons.

Neural networks have become increasingly popular in recent years due to their ability to solve complex problems that are difficult for traditional machine learning algorithms. For example, they can be used for image recognition tasks like identifying objects in photos or videos with high accuracy. They can also be used for natural language processing tasks like sentiment analysis or language translation.

In addition to these applications, neural networks are also used in predictive modeling tasks like financial forecasting, disease diagnosis, and fraud detection. They are well-suited for these tasks because they can learn from historical data and make accurate predictions based on that data.

Applications of Neural Networks

Neural networks have a wide range of applications across various industries. Some of the most common applications include:

– Image recognition: Neural networks can be used to identify objects in photos or videos with high accuracy.

– Natural language processing: Neural networks can be used for tasks like sentiment analysis, language translation, and speech recognition.

– Predictive modeling: Neural networks can be used to make accurate predictions about future events based on historical data.

– Fraud detection: Neural networks can be used to detect fraudulent activity in financial transactions or other areas.

– Disease diagnosis: Neural networks can be used to diagnose diseases based on medical imaging or other types of data.

What types of problems can neural networks solve?

Neural networks are well-suited for solving complex problems that involve large amounts of data and require the ability to recognize patterns and relationships. Some of the most common types of problems that neural networks can solve include:

– Image recognition: Neural networks can be trained to identify objects in photos or videos with high accuracy.

– Natural language processing: Neural networks can be used for tasks like sentiment analysis, language translation, and speech recognition.

– Predictive modeling: Neural networks can be used to make accurate predictions about future events based on historical data.

– Anomaly detection: Neural networks can be used to detect unusual patterns or behavior in large datasets.

– Recommendation systems: Neural networks can be used to recommend products or services based on user preferences.

Limitations of Neural Networks

While neural networks are powerful tools for solving complex problems, they do have some limitations. Some of the most common limitations include:

– Overfitting: Neural networks can sometimes overfit to the training data, which can lead to poor performance on new data.

– Lack of transparency: Neural networks can be difficult to interpret or understand, which can make it challenging to identify errors or biases in the model.

– Computational complexity: Neural networks can be computationally expensive to train and run, especially for large datasets.

– Data requirements: Neural networks typically need large amounts of data to learn patterns and relationships effectively. If the dataset is too small, the model may not perform well.

How are neural networks trained?

Neural networks are typically trained using gradient descent together with backpropagation. Backpropagation calculates how much each weight and bias contributed to the error between the predicted output and the actual output, and gradient descent then adjusts those parameters in the direction that reduces the error.

The training process involves feeding input data into the network and comparing its predicted output with the actual output. A loss function measures how far the predictions are from the actual outputs, and the goal of training is to minimize this loss by repeatedly adjusting the weights and biases of the neurons in the network.
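A minimal gradient-descent sketch follows, under simplifying assumptions: a single sigmoid neuron, binary cross-entropy loss, and the AND truth table as training data. With only one layer, backpropagation reduces to a single application of the chain rule; in a deeper network the same rule is applied layer by layer from the output back to the input. The settings (`lr`, `epochs`) are illustrative choices, not recommendations.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(p, y, eps=1e-12):
    # Binary cross-entropy between predicted probability p and label y.
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def forward(w, b, x):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def mean_loss(w, b, samples):
    return sum(loss(forward(w, b, x), y) for x, y in samples) / len(samples)


def train(samples, lr=0.5, epochs=2000):
    w, b = [0.0, 0.0], 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in samples:
            p = forward(w, b, x)  # forward pass
            # Chain rule: for sigmoid + cross-entropy, dLoss/dz = p - y.
            dz = p - y
            grad_w[0] += dz * x[0]
            grad_w[1] += dz * x[1]
            grad_b += dz
        # Gradient descent: move each parameter against its average gradient.
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
print("loss before:", mean_loss([0.0, 0.0], 0.0, AND))  # ln 2, about 0.693
w, b = train(AND)
print("loss after:", mean_loss(w, b, AND))
print("predictions:", [round(forward(w, b, x)) for x, _ in AND])
```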

Once a neural network has been trained on a set of input/output pairs, it can be used to make predictions on new input data that it has not seen before.

Hyperparameter Tuning

Hyperparameters are settings chosen before training a neural network, as opposed to the weights and biases the network learns during training. They include the learning rate, the number of layers, and the number of neurons per layer. Hyperparameter tuning involves selecting good values for these settings in order to improve model performance.

There are many different techniques for hyperparameter tuning, including grid search, random search, and Bayesian optimization. Grid search evaluates every combination of values in a predefined grid, while random search samples combinations at random from a predefined hyperparameter space. Bayesian optimization uses a probabilistic model of past results to choose which hyperparameters to try next.
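Grid search and random search can be contrasted in a short sketch. Because a real training run would not fit here, `validation_error` is a hypothetical, made-up function standing in for "train the network and measure its validation error"; the search ranges are also assumptions. Bayesian optimization is not shown.

```python
import itertools
import math
import random


def validation_error(learning_rate, num_layers):
    """Hypothetical stand-in for 'train a model, return its validation error'.
    This made-up function is smallest at learning_rate=0.01 and num_layers=3."""
    return (math.log10(learning_rate) + 2) ** 2 + 0.1 * (num_layers - 3) ** 2


def grid_search(learning_rates, layer_counts):
    best = None
    # Try every combination in the predefined grid.
    for lr, layers in itertools.product(learning_rates, layer_counts):
        err = validation_error(lr, layers)
        if best is None or err < best[0]:
            best = (err, lr, layers)
    return best


def random_search(n_trials, seed=0):
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        # Sample each hyperparameter from a predefined range.
        lr = 10 ** rng.uniform(-4, -1)  # log-uniform learning rate
        layers = rng.randint(1, 6)
        err = validation_error(lr, layers)
        if best is None or err < best[0]:
            best = (err, lr, layers)
    return best


print("grid:  ", grid_search([1e-4, 1e-3, 1e-2, 1e-1], [1, 2, 3, 4]))
print("random:", random_search(16))
```

Both searches use the same budget of 16 evaluations here; grid search spends it on fixed points, while random search tries 16 distinct learning rates, which is one reason it often does well when only a few hyperparameters matter.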

What is the accuracy of neural networks?

The accuracy of neural networks can vary widely depending on the problem they are designed to solve and the quality of the data used to train them. In general, neural networks are capable of achieving high levels of accuracy on a wide range of tasks.

For example, convolutional neural networks (CNNs) have achieved state-of-the-art performance on image recognition tasks like object detection and classification. Recurrent neural networks (RNNs) have achieved high levels of accuracy on natural language processing tasks like language translation and sentiment analysis.

However, it’s important to note that neural networks are not perfect and can still make errors or produce biased results. It’s also important to evaluate the performance of a neural network on a test set that it has not seen before in order to get an accurate estimate of its true accuracy.

Evaluating Model Performance

There are many different metrics that can be used to evaluate the performance of a neural network model, including accuracy, precision, recall, and F1 score. Accuracy measures the percentage of correctly classified instances, while precision measures the proportion of true positives among all positive predictions. Recall measures the proportion of true positives among all actual positives, and the F1 score is the harmonic mean of precision and recall.

It’s important to select an appropriate evaluation metric based on the specific problem being solved and the desired outcome. For example, in medical diagnosis applications, it may be more important to maximize recall (i.e., minimize false negatives) than to maximize overall accuracy.
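The four classification metrics can be computed from counts of true and false positives and negatives. This sketch assumes binary labels with 1 as the positive class; the example labels are made up. The second call shows why accuracy alone can mislead on imbalanced data, such as a medical test where positives are rare.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


# Made-up labels: 4 positives, 6 negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))  # (0.8, 0.75, 0.75, 0.75)

# Imbalanced case: a "model" that always predicts negative.
rare_true = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(classification_metrics(rare_true, [0] * 10))  # (0.9, 0.0, 0.0, 0.0)
```

The always-negative model scores 90% accuracy while missing every positive case, which is why recall matters more than accuracy in settings like disease screening.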

Can neural networks be used for image recognition?

Yes, neural networks can be used for image recognition tasks like object detection and classification. Convolutional Neural Networks (CNNs) are particularly well-suited for these types of tasks because they are designed to recognize spatial patterns in data.

CNNs work by applying a set of filters to the input image that detect specific features like edges, corners, and textures. These features are then combined and processed through multiple layers of neurons until the final output layer produces a prediction about what object is present in the image.
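Applying a single filter can be sketched in plain Python. This is a "valid" (no padding), stride-1 sliding window; like most deep learning libraries, it computes cross-correlation, which is what CNN layers usually call convolution. The vertical-edge kernel below is hand-set for illustration, whereas a CNN learns its filter values during training.

```python
def convolve2d(image, kernel):
    """'Valid' 2D cross-correlation with stride 1 (no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and one image patch.
            s = 0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out


# 5x5 image: dark left side (0), bright right side (1).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-set vertical-edge detector.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
feature_map = convolve2d(image, kernel)
print(feature_map)  # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
```

The filter responds strongly (3) where its window straddles the dark-to-bright boundary and gives 0 over the uniform bright region, producing a feature map that marks where the edge is.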

CNNs have achieved state-of-the-art performance on a wide range of image recognition tasks including object detection, face recognition, and scene understanding. They have also been used for applications like self-driving cars, medical imaging, and surveillance systems.

Transfer Learning

Transfer learning is a technique used to improve the performance of neural networks on new tasks by leveraging knowledge learned from previous tasks. For example, if a CNN has been trained to recognize objects in photos, it may be possible to use this pre-trained model as a starting point for a new task like identifying different types of animals.

This is typically done by freezing the weights of some layers of the pre-trained model (usually the early layers, which capture general features), replacing the final layers, and training the unfrozen layers on the new task using a smaller dataset. This approach can save time and computational resources and often improves performance when data for the new task is limited.
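A minimal feature-extraction sketch of transfer learning follows. It assumes a hypothetical "pre-trained" layer with hand-set weights that stays frozen, and trains only a new output layer (the "head") on a small made-up dataset for the new task. In a real framework the frozen layers would come from an actual pre-trained CNN.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical "pre-trained" layer weights; in practice these would come
# from a network trained on a large dataset for an earlier task.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]


def frozen_features(x, weights):
    # ReLU(W x) using the frozen pre-trained weights (never updated below).
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def predict(head_w, head_b, x, weights):
    h = frozen_features(x, weights)
    return sigmoid(sum(w * hi for w, hi in zip(head_w, h)) + head_b)


def fine_tune_head(samples, weights, lr=0.5, epochs=500):
    """Train only a new output layer on the new task."""
    head_w, head_b = [0.0] * len(weights), 0.0
    for _ in range(epochs):
        for x, y in samples:
            h = frozen_features(x, weights)
            # Sigmoid + cross-entropy gradient with respect to the head's input sum.
            dz = predict(head_w, head_b, x, weights) - y
            head_w = [w - lr * dz * hi for w, hi in zip(head_w, h)]
            head_b -= lr * dz
    return head_w, head_b


# Small made-up dataset for the new task.
new_task = [((2.0, 0.0), 1), ((0.0, 2.0), 0), ((3.0, 1.0), 1), ((1.0, 3.0), 0)]
snapshot = [row[:] for row in PRETRAINED_W]
head_w, head_b = fine_tune_head(new_task, PRETRAINED_W)
print("frozen weights unchanged:", PRETRAINED_W == snapshot)
print("predictions:", [round(predict(head_w, head_b, x, PRETRAINED_W)) for x, _ in new_task])
```

Unfreezing some of the pre-trained layers and updating them with a small learning rate is the other common variant; it is omitted here to keep the sketch short.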

Can neural networks be used for natural language processing?

Yes, neural networks can be used for natural language processing (NLP) tasks like sentiment analysis, language translation, and speech recognition. Recurrent Neural Networks (RNNs) are well-suited for these types of tasks because they are designed to process sequential data like text or speech.

RNNs work by processing one word (or token) at a time while maintaining an internal hidden state that carries information about the previous words. In principle, this lets them capture dependencies between words that are far apart; in practice, simple RNNs struggle with long-range dependencies because of vanishing gradients, which gated variants such as LSTMs and GRUs were designed to mitigate.
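One step of a simple RNN can be sketched as updating a hidden state from the current input and the previous state. The token vectors and weights below are hypothetical, hand-picked values, and the sketch uses the plain (Elman-style) update rather than an LSTM or GRU.

```python
import math


def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def rnn_step(x_t, h_prev, W_xh, W_hh, b):
    # New state mixes the current input with the previous state:
    # h_t = tanh(W_xh x_t + W_hh h_prev + b)
    from_input = matvec(W_xh, x_t)
    from_state = matvec(W_hh, h_prev)
    return [math.tanh(a + c + bias) for a, c, bias in zip(from_input, from_state, b)]


def run_rnn(sequence, W_xh, W_hh, b):
    h = [0.0] * len(b)  # initial hidden state
    for x_t in sequence:  # process one token vector at a time
        h = rnn_step(x_t, h, W_xh, W_hh, b)
    return h  # final state summarizes the whole sequence


# Hypothetical 2-dimensional token vectors and hand-picked weights.
A, B = [1.0, 0.0], [0.0, 1.0]
W_xh = [[1.0, 0.0], [0.0, 1.0]]
W_hh = [[0.5, -0.5], [0.5, 0.5]]
b = [0.0, 0.0]

print(run_rnn([A, B], W_xh, W_hh, b))
print(run_rnn([B, A], W_xh, W_hh, b))  # same tokens, different order, different state
```

Because the same weights are reused at every step and the state is carried forward, the final state depends on word order, which a simple bag-of-words representation cannot capture.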

RNNs, particularly variants such as LSTMs, achieved state-of-the-art performance on many NLP tasks, including machine translation, text summarization, and question answering, before Transformer-based models largely superseded them. They have also been used for applications like speech recognition, chatbots, and virtual assistants.

Word Embeddings

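Word embeddings are a technique for representing words as dense vectors of real numbers, typically with tens to a few hundred dimensions. Words with similar meanings end up close together in this vector space, which allows neural networks to process text data more effectively by capturing semantic relationships between words.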
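Closeness between embeddings is commonly measured with cosine similarity. The 4-dimensional vectors below are hypothetical and hand-made for illustration; real embeddings such as Word2Vec or GloVe are learned from large text corpora and have many more dimensions.

```python
import math

# Hypothetical 4-dimensional embeddings, hand-made for illustration only.
embeddings = {
    "cat": [0.8, 0.1, 0.6, 0.0],
    "dog": [0.7, 0.2, 0.7, 0.1],
    "stock": [0.0, 0.9, 0.1, 0.8],
}


def cosine_similarity(u, v):
    # 1.0 means same direction; values near 0 mean unrelated directions.
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


print(cosine_similarity(embeddings["cat"], embeddings["dog"]))    # about 0.98
print(cosine_similarity(embeddings["cat"], embeddings["stock"]))  # about 0.12
```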

There are many different types of word embeddings available including Word2Vec, GloVe, and FastText. These embeddings can be pre-trained on large datasets or learned from scratch using the training data for a specific task.

Once word embeddings have been generated, they can be used as input to neural networks for tasks like sentiment analysis or language translation. This approach has been shown to improve model performance and reduce the amount of training data required.

Are there any limitations to using neural networks?

Neural networks have proven to be incredibly powerful tools for solving complex problems in various fields such as computer vision, speech recognition, and natural language processing. However, like any other technology, they have their limitations. One of the major limitations of neural networks is their tendency to overfit or underfit data. Overfitting occurs when the model becomes too complex and starts to memorize the training data rather than learning patterns that generalize to new data. On the other hand, underfitting occurs when the model is too simple and fails to capture the underlying patterns in the data.

Another limitation of neural networks is their black-box nature. Neural networks are often referred to as black boxes because it can be challenging to understand how they arrive at their decisions. This lack of interpretability can make it difficult for researchers and practitioners to trust the results produced by these models. Additionally, training large neural networks can be computationally expensive and time-consuming, making them less practical for some applications.

Limitations of Neural Networks:

1. Overfitting

Overfitting occurs when a model becomes too complex and starts to memorize the training data rather than learning patterns that generalize to new data.

2. Black-Box Nature

The lack of interpretability makes it difficult for researchers and practitioners to trust the results produced by these models.

3. Computational Cost

Training large neural networks can be computationally expensive and time-consuming.

How do neural networks compare to other machine learning techniques?

Neural networks are just one type of machine learning technique used for classification or regression tasks. Other popular techniques include decision trees, support vector machines (SVMs), linear regression, logistic regression, k-nearest neighbors (KNN), and random forests. While each technique has its strengths and weaknesses, neural networks are particularly well-suited for solving complex problems with large amounts of data.

One of the main advantages of neural networks over other machine learning techniques is their ability to learn complex non-linear relationships between inputs and outputs. Neural networks can identify patterns in data that may be difficult or impossible to detect using other methods. Additionally, neural networks can handle both structured and unstructured data, making them versatile tools for a wide range of applications.

However, there are also some drawbacks to using neural networks compared to other machine learning techniques. For example, decision trees are often more interpretable than neural networks because they produce a clear set of rules that can be easily understood by humans. SVMs are also known for their ability to handle high-dimensional data efficiently and effectively.

Comparison of Neural Networks to Other Machine Learning Techniques:

1. Strengths

- Ability to learn complex non-linear relationships

- Versatility in handling structured and unstructured data

2. Weaknesses

- Less interpretable than some other techniques (e.g., decision trees)

- Other techniques, such as SVMs, can be more efficient on some high-dimensional problems, including those with relatively few training samples

Can a single neural network solve multiple problems simultaneously?

Neural networks can be designed to solve a variety of different problems such as image classification, language translation, speech recognition, and anomaly detection. However, it is generally not recommended to try to solve multiple unrelated problems with a single neural network model.

The reason for this is that unrelated problems typically require different types of input features, output targets, and optimization objectives. Attempting to combine these different requirements into a single model can lead to poor performance and increased complexity. Instead, it is typically better to train a separate neural network model for each problem.

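There are some exceptions to this rule, however. Multi-task learning trains a single network, with shared layers and a separate output layer for each task, on several related problems at once, which can improve performance when the tasks share useful structure. Transfer learning is a related technique that allows a pre-trained neural network model to be adapted for use on a new but related problem. In this case, the pre-trained model can be fine-tuned on the new data with relatively few additional training examples.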

Using a Single Neural Network for Multiple Problems:

It is generally not recommended to try to solve multiple unrelated problems with a single neural network model.

Exceptions:

- Transfer Learning – Allows a pre-trained neural network model to be adapted for use on a new but related problem.

How do researchers evaluate the effectiveness of a neural network model?

Evaluating the effectiveness of a neural network model typically involves measuring its performance on a set of test data that was not used during training. The most common evaluation metrics used for classification tasks include accuracy, precision, recall, and F1 score. For regression tasks, metrics such as mean squared error (MSE) or mean absolute error (MAE) are often used.
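The two regression metrics are straightforward to compute. The values below are made-up targets and predictions, used only to show the arithmetic.

```python
def mean_squared_error(y_true, y_pred):
    # Average of squared differences: large errors dominate.
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    # Average of absolute differences: every unit of error counts equally.
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up regression targets and predictions.
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.5, 5.0, 4.0, 8.0]
print(mean_squared_error(y_true, y_pred))   # 1.3125
print(mean_absolute_error(y_true, y_pred))  # 0.875
```

Because MSE squares each error, the single error of 2.0 contributes 4.0 of the 5.25 squared-error total, so MSE penalizes large mistakes more heavily than MAE does.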

In addition to these standard metrics, researchers may also evaluate the robustness of their models by testing them on adversarial examples or noisy data. Adversarial examples are inputs that have been intentionally modified in small ways to cause the model to make incorrect predictions. Testing models on adversarial examples can help researchers identify potential weaknesses in their models and improve their overall performance.
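An adversarial example can be sketched with the fast gradient sign method (FGSM), one well-known way to construct such inputs; it is used here as an illustration, not as the only method. The sketch assumes a hypothetical logistic model with hand-set weights, for which the gradient of the cross-entropy loss with respect to the input has the simple closed form (p - y) * w.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, w, b):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, epsilon):
    """Fast gradient sign method against a logistic model."""
    p = predict_proba(x, w, b)
    # Gradient of cross-entropy loss with respect to the input: (p - y) * w.
    grad_x = [(p - y) * wi for wi in w]
    # Nudge every feature by epsilon in the direction that increases the loss.
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad_x)]


# Hypothetical trained weights and a correctly classified input (label 1).
w, b = [2.0, -3.0, 1.0], 0.0
x, y = [0.5, 0.1, 0.2], 1
x_adv = fgsm(x, y, w, b, epsilon=0.2)

print(predict_proba(x, w, b))      # about 0.71 -> class 1
print(predict_proba(x_adv, w, b))  # about 0.43 -> class 0
```

Each feature moves by only 0.2, yet the predicted class flips; deep networks can be fooled the same way, with perturbations to images that are often too small for a person to notice.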

Finally, it is important for researchers to report not only their final evaluation metrics but also how they arrived at those metrics. This includes details about the data preprocessing steps taken, hyperparameter tuning methods used, and any other relevant experimental details.

Evaluation of Neural Network Models:

1. Classification Tasks

- Accuracy

- Precision

- Recall

- F1 Score

2. Regression Tasks

- Mean Squared Error (MSE)

- Mean Absolute Error (MAE)

3. Robustness Testing

Testing models on adversarial examples or noisy data to identify potential weaknesses and improve overall performance.

Can we trust the decisions made by a neural network model without understanding how it works?

The black-box nature of neural networks can make it challenging to understand how they arrive at their decisions. This lack of interpretability can be a significant concern, particularly in applications where the consequences of incorrect predictions are severe, such as in healthcare or finance.

However, there are several techniques that researchers can use to increase the transparency and interpretability of their models. One such technique is feature visualization, which involves visualizing the patterns learned by the model in order to gain insight into how it is making its predictions. Another technique is layer-wise relevance propagation (LRP), which allows researchers to trace back the contribution of each input feature to the final output.

Ultimately, while it may be possible to trust the decisions made by a neural network model without fully understanding how it works, doing so requires careful consideration and validation. In high-stakes applications, some level of interpretability is usually necessary to help verify that decisions are fair and unbiased.

Trustworthiness of Neural Network Decisions:

The black-box nature of neural networks can make it challenging to understand how they arrive at their decisions.

Techniques for Increasing Transparency and Interpretability:

- Feature Visualization

- Layer-wise Relevance Propagation (LRP)

Considerations for High-Stakes Applications:

In high-stakes applications, some level of interpretability is usually necessary to help verify that decisions are fair and unbiased.

Are there any ethical concerns with using neural networks in decision-making processes?

The use of neural networks in decision-making processes can raise several ethical concerns. One major concern is the potential for bias and discrimination. Neural networks learn from the data they are trained on, which means that if the training data contains biases or discriminatory patterns, these may be perpetuated by the model.

Another concern is the lack of transparency and interpretability of neural network models. As mentioned previously, this can make it difficult to understand how decisions are being made and can lead to mistrust or skepticism about the fairness of those decisions.

Finally, there are also concerns around privacy and security when using neural network models. These models often require large amounts of data to be collected and stored, which raises questions about who has access to that data and how it is being used.

Ethical Concerns with Using Neural Networks in Decision-Making Processes:

1. Bias and Discrimination

If the training data contains biases or discriminatory patterns, these may be perpetuated by the model.

2. Lack of Transparency and Interpretability

This can make it difficult to understand how decisions are being made and can lead to mistrust or skepticism about the fairness of those decisions.

3. Privacy and Security

The collection and storage of large amounts of data raise questions about who has access to that data and how it is being used.

What advancements have been made recently in improving the performance and efficiency of neural networks?

In recent years, there have been several advancements in improving the performance and efficiency of neural networks. One major area of focus has been on developing architectures that are better suited for specific types of problems. For example, convolutional neural networks (CNNs) have been particularly successful in image classification tasks, recurrent neural networks (RNNs) have been widely used for natural language processing, and Transformer architectures based on attention have more recently become the leading approach for many language tasks.

Another area of focus has been on developing new training techniques that can improve the speed and stability of training large neural network models. One such technique is batch normalization, which normalizes each layer's inputs using the mean and variance computed over the current mini-batch. This mainly stabilizes and speeds up training, and it can also have a mild regularizing effect that helps reduce overfitting.
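The normalization step of batch normalization can be sketched for a single feature. This assumes training-mode behavior only: a real layer also learns `gamma` and `beta` for each feature and keeps running statistics for use at inference time, which this sketch omits. The activation values are made up.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization for one feature across a mini-batch."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    # Normalize to roughly zero mean and unit variance, then scale and shift
    # with gamma and beta (learned parameters in a real network).
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


activations = [2.0, 4.0, 6.0, 8.0]  # made-up activations from one neuron
normalized = batch_norm(activations)
print([round(v, 3) for v in normalized])  # [-1.342, -0.447, 0.447, 1.342]
```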

Finally, researchers have also been exploring ways to optimize the hardware used to train and run neural network models. Graphics processing units (GPUs), originally built for rendering graphics, have been widely adopted for neural network training, and specialized chips such as tensor processing units (TPUs) were designed specifically for these workloads. Both can significantly speed up training times.

Advancements in Improving Neural Network Performance and Efficiency:

1. New Architectures

New architectures are being developed that are better suited for specific types of problems.

2. New Training Techniques

New training techniques such as batch normalization can improve the speed and stability of training large neural network models.

3. Hardware Optimization

Specialized hardware such as GPUs or TPUs can significantly speed up training times.

In conclusion, neural networks have proven to be highly effective in solving complex problems and making accurate predictions. However, their success depends on the quality of the data they are trained on and the expertise of those developing them.

Does a neural network work?

Yes. By learning to model complex nonlinear relationships between input and output data, neural networks can help computers make intelligent decisions with limited human input.

Can a neural network reach 100% accuracy?

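In some cases, yes. For example, if the blue and red points in a scatter plot can be perfectly separated by a line, a neural network that learns the correct placement of that line can classify every point correctly, reaching 100% accuracy. On real-world data with noise or overlapping classes, however, 100% accuracy on unseen data is rarely achievable. This statement was made on June 18, 2016.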

What is a downside of neural networks?

Compared to traditional machine learning algorithms, neural networks typically require much more data, often thousands to millions of labeled samples. Collecting that much labeled data can be difficult, and when less data is available, other algorithms may be more effective.

Are neural networks really like the brain?

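Only loosely. Neural networks are computing systems inspired by the structure of the human brain, but their artificial neurons are simple mathematical functions, far simpler than biological neurons. Even so, they are the foundation for a wide range of artificial intelligence technologies, such as speech recognition, medical imaging analysis, and computer vision.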

What is the success rate of neural networks?

According to Chang and Chen (2016), modern neural networks correctly classify over 99.5% of the validation examples on the MNIST handwritten-digit benchmark, a level of accuracy that became achievable only relatively recently with deep learning. This information was reported on June 19, 2018.

Can you over train a neural network?

Yes. Overtraining, or overfitting, occurs when a neural network has too much capacity relative to the task and the amount of training data, so it memorizes the training examples, including irrelevant noise, instead of learning the underlying pattern. Such a model performs well on the training data but poorly on new data.