In recent years, machine learning has emerged as a powerful tool for data analysis and decision-making. A common question, however, is whether machine learning requires a GPU (graphics processing unit) to run efficiently. This article explores the role of GPUs in machine learning and their impact on performance.

What is machine learning?

Definition

Machine learning is a subset of artificial intelligence that involves training computer algorithms to learn from data and make predictions or decisions without being explicitly programmed. It is based on the idea that machines can learn and improve on their own by analyzing large amounts of data.

Examples

One of the most common examples of machine learning in action is recommendation systems used by companies like Netflix and Amazon. These systems use algorithms to analyze user data such as viewing history, ratings, and search queries to suggest relevant content or products.

Another example of machine learning is image recognition software used in self-driving cars. The software uses deep learning algorithms to analyze visual data from cameras mounted on the car to identify objects such as pedestrians, traffic lights, and other vehicles.

Applications

Machine learning has numerous applications across various industries. Some examples include:

– Fraud detection in banking and finance

– Predictive maintenance in manufacturing

– Personalized marketing in e-commerce

– Medical diagnosis in healthcare

How does machine learning work?

Data collection

The first step in building a machine learning model is collecting relevant data. This can be done through various sources such as sensors, databases, or web scraping.

Data preprocessing

Once the data has been collected, it needs to be cleaned and preprocessed before it can be used for training a model. This involves tasks such as removing duplicates, handling missing values, and converting categorical variables into numerical ones.
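
The three preprocessing tasks above can be sketched in plain Python. This is a minimal illustration, assuming a hypothetical toy dataset of (city, size, price) records where `None` marks a missing size. The `preprocess` helper is hypothetical; real projects would typically use a library such as pandas or scikit-learn for the same steps.

```python
# Hypothetical helper: rows are (city, size_sqm, price) tuples; None = missing size.
def preprocess(rows):
    # 1. Remove exact duplicate rows, keeping the first occurrence and the order.
    seen = set()
    unique = []
    for row in rows:
        if row not in seen:
            seen.add(row)
            unique.append(row)

    # 2. Handle missing values by filling them with the mean of the known sizes.
    known_sizes = [size for _, size, _ in unique if size is not None]
    mean_size = sum(known_sizes) / len(known_sizes)

    # 3. Convert the categorical city column into numbers with one-hot encoding.
    cities = sorted({city for city, _, _ in unique})
    examples = []
    for city, size, price in unique:
        one_hot = [1.0 if city == c else 0.0 for c in cities]
        filled_size = size if size is not None else mean_size
        examples.append((one_hot + [filled_size], price))
    return cities, examples


# Hypothetical toy data.
rows = [
    ("Paris", 50.0, 300),
    ("Lyon", None, 200),
    ("Paris", 50.0, 300),  # duplicate, will be dropped
    ("Lyon", 70.0, 250),
]
cities, examples = preprocess(rows)
```

Here the duplicate Paris row is dropped, Lyon's missing size becomes 60.0 (the mean of 50 and 70), and each city becomes a pair of 0/1 columns.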

Model selection

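The next step is selecting an appropriate machine learning algorithm based on the type of problem you are trying to solve. Common families include regression (predicting numeric values), classification (predicting categories), clustering (grouping similar items), and deep learning (neural networks for complex data such as images and text).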

Training

After selecting an algorithm, the model needs to be trained on the data. In supervised learning, this means feeding the algorithm labeled examples and repeatedly adjusting its parameters to reduce the gap between its predictions and the correct answers.
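
"Adjusting its parameters" usually means running an optimization loop. The sketch below uses batch gradient descent (one common choice, not the only one) to fit a straight line y ≈ w·x + b by reducing mean squared error. The `train_linear` helper and the toy data, generated from y = 2x + 1, are hypothetical.

```python
def train_linear(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step both parameters against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical labeled data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear(xs, ys)
```

After training, `w` and `b` end up very close to 2 and 1, the values used to generate the data. The learning rate is a hyperparameter: too large a value makes this loop diverge, and too small a value makes it slow.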

Evaluation

Once the model has been trained, it needs to be evaluated on a separate set of data to test its accuracy and performance. This helps identify any issues or areas for improvement.
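
Holding out a separate test set can be sketched as follows. This is a minimal illustration under stated assumptions: the toy data comes from y = 3x + 2, the helpers are hypothetical (scikit-learn offers a similar `train_test_split`), and a naive "always predict the training mean" baseline stands in for a real model so the evaluation step has something to compare against.

```python
import random


def train_test_split(pairs, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and hold out a fraction of it for testing."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def mean_squared_error(model, pairs):
    """Average squared gap between the model's predictions and the true targets."""
    return sum((model(x) - y) ** 2 for x, y in pairs) / len(pairs)


# Hypothetical toy data generated from y = 3x + 2.
data = [(x, 3 * x + 2) for x in range(10)]
train, test = train_test_split(data)

# Two hypothetical "models": a naive baseline and the true rule.
baseline_value = sum(y for _, y in train) / len(train)


def baseline(x):
    return baseline_value  # always predicts the training mean


def exact(x):
    return 3 * x + 2


print("baseline test MSE:", mean_squared_error(baseline, test))
print("exact-rule test MSE:", mean_squared_error(exact, test))
```

Because the test points were never used to compute the baseline, its error on them is an honest estimate of how it would do on new data.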

Prediction

Finally, the trained model can be used to make predictions or decisions on new data that it hasn’t seen before.

Benefits of using machine learning

Efficiency

Machine learning can automate repetitive tasks, saving time and resources. For example, chatbots powered by machine learning algorithms can handle routine customer inquiries 24/7 without human intervention.

Accuracy

Machine learning models can analyze far more data than a person could review manually and, when well trained and validated, can make highly accurate predictions. This is particularly useful in fields such as healthcare, where accurate diagnosis is critical.

Personalization

Machine learning algorithms can personalize recommendations based on user behavior and preferences. This leads to a better user experience and higher engagement.

Different types of machine learning algorithms

Supervised learning

In supervised learning, the algorithm is trained on labeled data where each input is associated with a corresponding output. The goal is to learn a mapping function that can accurately predict outputs for new inputs.

Examples:

– Regression: predicting house prices based on features such as location, size, and number of bedrooms.

– Classification: identifying whether an email is spam or not based on its content.
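
For the spam example, one simple classification algorithm is k-nearest neighbors (k-NN): a new email gets the majority label of the k most similar training emails. The sketch assumes each email has already been turned into two hypothetical numeric features (how often the word "free" appears and how many links it contains); real spam filters use far richer features and models, and `knn_predict` is an illustrative helper.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    # Sort training examples by Euclidean distance to the query point.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical features per email: (occurrences of "free", number of links).
emails = [
    ((5, 8), "spam"), ((4, 6), "spam"), ((6, 9), "spam"),
    ((0, 1), "ham"), ((1, 0), "ham"), ((0, 2), "ham"),
]
print(knn_predict(emails, (5, 7)))
```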

Unsupervised learning

In unsupervised learning, the algorithm is trained on unlabeled data where there are no predefined outputs. The goal is to discover patterns or relationships within the data.

Examples:

– Clustering: grouping similar customers based on their purchase history.

– Dimensionality reduction: reducing the number of features in a dataset while retaining its important characteristics.
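
Grouping customers can be sketched with k-means (Lloyd's algorithm), one widely used clustering method: alternate between assigning each point to its nearest centroid and moving each centroid to the mean of its points. The toy points stand for hypothetical customers described by (orders per year, average spend), and `kmeans` is an illustrative helper, not a library function.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Group points into k clusters with Lloyd's algorithm (k-means)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its closest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[i] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids, clusters


# Hypothetical customers: (orders per year, average spend).
customers = [(1, 1), (1, 2), (2, 1), (20, 20), (21, 20), (20, 21)]
centroids, clusters = kmeans(customers, k=2)
```

No labels are given; the two groups emerge only from how close the points are to each other.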

Reinforcement learning

In reinforcement learning, the algorithm learns by interacting with an environment and receiving feedback in the form of rewards or punishments. The goal is to learn a policy that maximizes the cumulative reward over time.

Examples:

– Game playing: training an AI agent to play a game such as chess or Go.

– Robotics: teaching a robot to perform tasks such as grasping objects or navigating through an environment.
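
The reward-driven loop described above can be sketched with tabular Q-learning, a classic reinforcement learning algorithm, on a hypothetical five-cell corridor where the agent earns a reward of 1 only when it reaches the last cell. The environment, reward, and hyperparameter values are assumptions chosen for illustration; systems that play chess or Go use far more sophisticated methods.

```python
import random

N_STATES = 5        # hypothetical corridor with cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # index 0 moves left, index 1 moves right


def step(state, action):
    """Toy environment: move along the corridor; reward 1 only for reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore: random action
            else:
                best = max(q[state])  # exploit, breaking ties at random
                a = rng.choice([i for i in range(2) if q[state][i] == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted value of the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning()
# Greedy policy per non-goal cell: 1 means "move right".
policy = [max(range(2), key=lambda i: q[s][i]) for s in range(N_STATES - 1)]
```

After training, the learned policy moves right in every cell, which is the shortest path to the reward.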

Training a machine learning model

Data preparation

The first step in training a machine learning model is preparing the data. This involves cleaning and preprocessing the data to ensure it is suitable for training.

Model selection

Next, you need to select an appropriate machine learning algorithm based on the type of problem you are trying to solve.

Hyperparameter tuning

Once you have selected an algorithm, you need to tune its hyperparameters. Hyperparameters are parameters that are not learned during training but affect the behavior of the model. Examples include learning rate, batch size, and number of hidden layers.
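
Hyperparameter tuning is often done with a grid search: train once per combination and keep the combination with the lowest error on held-out validation data. The sketch below tunes the learning rate and number of epochs for a small gradient-descent line fit; the helpers, grid values, and toy data are hypothetical.

```python
import itertools


def train_linear(xs, ys, learning_rate, epochs):
    """Fit y ≈ w*x + b with batch gradient descent on mean squared error."""
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        w -= learning_rate * 2 * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * 2 * sum(errors) / n
    return w, b


def mse(params, xs, ys):
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(train, val, grid):
    """Train once per hyperparameter combination; keep the lowest validation error."""
    best = None
    for lr, epochs in itertools.product(grid["learning_rate"], grid["epochs"]):
        params = train_linear(*train, learning_rate=lr, epochs=epochs)
        score = mse(params, *val)
        if best is None or score < best[0]:
            best = (score, {"learning_rate": lr, "epochs": epochs})
    return best


# Hypothetical toy data from y = 2x + 1, split into training and validation parts.
train_data = ([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
val_data = ([4.0, 5.0], [9.0, 11.0])
grid = {"learning_rate": [0.001, 0.01, 0.1], "epochs": [10, 100, 1000]}
best_score, best_params = grid_search(train_data, val_data, grid)
```

With this grid, the search picks a learning rate of 0.1 and 1,000 epochs; the smaller learning rates have not converged within the allowed epochs.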

Training and validation

After selecting hyperparameters, you can train your model on one portion of the data and validate it on another. This helps detect overfitting, where the model memorizes the training data instead of generalizing to new data, so you can adjust the model before it is deployed.
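
A common extension of this train/validate split is k-fold cross-validation: the data is cut into k folds, and each fold takes one turn as the validation set while the rest are used for training. The hypothetical `k_fold_indices` helper below only generates the index splits; in practice you would shuffle the data first, and libraries such as scikit-learn provide `KFold` for this.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


for train_idx, val_idx in k_fold_indices(10, 3):
    print("validate on", val_idx)
```

Averaging the validation error over all folds gives a steadier estimate than a single split, at the cost of training k times.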

CPU vs GPU for machine learning

Definition

A CPU (central processing unit) is a general-purpose processor that handles most operations in a computer. A GPU (graphics processing unit) is specialized hardware designed for parallel processing tasks such as rendering graphics and accelerating machine learning algorithms.

Advantages of GPUs over CPUs for machine learning

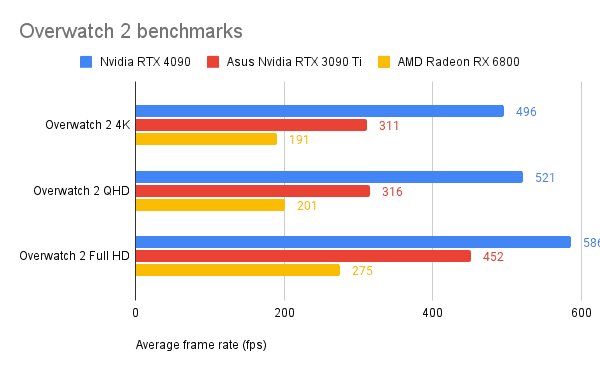

– Parallel processing: GPUs can perform many calculations simultaneously, making them much faster than CPUs for certain tasks such as matrix multiplication.

– Memory bandwidth: GPUs have much higher memory bandwidth than CPUs, allowing them to handle large amounts of data more efficiently.

– Cost-effectiveness: For highly parallel workloads such as training deep neural networks, a GPU often delivers more computation per dollar than a CPU, because it completes the same job in far less time.

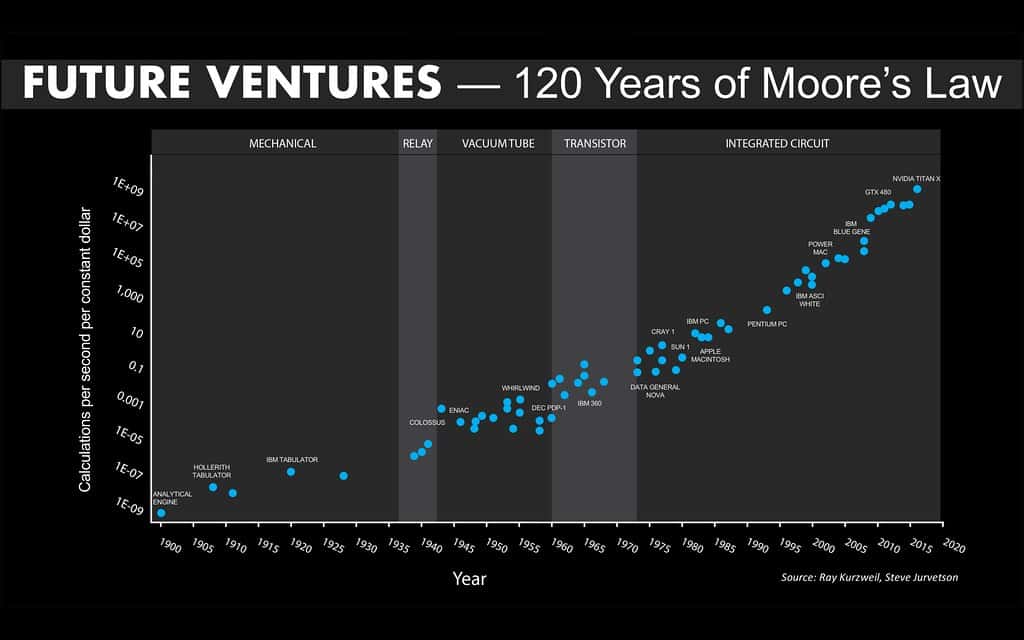
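
Matrix multiplication suits GPUs because every cell of the output is an independent dot product, so all cells can be computed at once. The sketch below makes that independence explicit by handing each cell to a thread pool. It illustrates the structure only: CPython threads will not make this arithmetic faster (the global interpreter lock serializes it), whereas a GPU spreads such dot products across thousands of cores. `matmul_parallel` is a hypothetical helper.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_parallel(a, b, workers=4):
    """Multiply matrices a (n x m) and b (m x p) given as lists of rows.

    Every output cell C[i][j] is an independent dot product of row i of `a`
    and column j of `b`, so all n*p cells could be computed simultaneously.
    """
    cols = list(zip(*b))  # columns of b
    cells = [(i, j) for i in range(len(a)) for j in range(len(cols))]

    def dot(cell):
        i, j = cell
        return sum(x * y for x, y in zip(a[i], cols[j]))

    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(dot, cells))  # results come back in input order

    p = len(cols)
    return [values[r * p:(r + 1) * p] for r in range(len(a))]


print(matmul_parallel([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```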

Speed difference between training on a GPU vs CPU

The speed difference between training on a GPU vs CPU depends on the specific task and hardware used. In general, GPUs can be several times faster than CPUs for deep learning tasks that involve large amounts of data and complex models.
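
Because the speedup depends on your task and hardware, the most reliable number is one you measure on your own workload. A minimal timing sketch, assuming you can wrap the CPU and GPU versions of the same job as zero-argument functions (the helper names are hypothetical). One caveat: GPU frameworks often launch work asynchronously, so the timed function should wait for the GPU to finish (in PyTorch, for example, by calling `torch.cuda.synchronize()`) before returning.

```python
import statistics
import time


def median_runtime(fn, repeats=5):
    """Run fn several times and return the median wall-clock time in seconds."""
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        times.append(time.perf_counter() - start)
    return statistics.median(times)


def speedup(baseline_seconds, accelerated_seconds):
    """How many times faster the accelerated run is than the baseline."""
    return baseline_seconds / accelerated_seconds


print("median seconds:", median_runtime(lambda: sum(range(100_000))))
```

For example, a job taking 10.0 s on the CPU and 2.5 s on the GPU gives `speedup(10.0, 2.5) == 4.0`.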

Do you need a high-end GPU for machine learning?

You don’t necessarily need a high-end GPU for machine learning, but it can significantly improve performance. The type of GPU you need depends on the size of your dataset, complexity of your model, and budget.
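
A rough way to judge how much GPU memory a model needs is to count bytes per parameter. The sketch below assumes 32-bit floats (4 bytes) and training with the Adam optimizer, which stores four values per parameter (the weight, its gradient, and two moment estimates). Activations, the data batch, and framework overhead are ignored, so treat the result as a lower bound; the helper name and defaults are assumptions for illustration.

```python
def training_memory_gb(n_params, bytes_per_value=4, copies=4):
    """Rough lower bound on GPU memory, in gigabytes (1 GB = 1e9 bytes).

    Assumes fp32 values (4 bytes) and `copies` stored values per parameter:
    weight, gradient, and Adam's two moment estimates by default.
    Activations, the data batch, and framework overhead are NOT included.
    """
    return n_params * bytes_per_value * copies / 1e9


print(training_memory_gb(1_000_000_000))            # training state only
print(training_memory_gb(1_000_000_000, copies=1))  # weights only, e.g. inference
```

Under these assumptions, a model with 1 billion parameters needs at least 16 GB just for its training state, while holding the weights alone for inference needs about 4 GB.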

Disadvantages of using GPUs for machine learning

– Power consumption: GPUs consume more power than CPUs, leading to higher energy costs.

– Limited compatibility: Some software libraries may not be compatible with certain GPU architectures or require additional configuration.

– Hardware limitations: Some older or lower-end GPUs may not have enough memory or processing power to handle certain tasks.

Using cloud-based GPUs for machine learning instead of buying hardware

Definition

Cloud-based GPUs refer to renting virtual machines with GPU hardware from cloud service providers such as Amazon Web Services (AWS), Google Cloud Platform (GCP), or Microsoft Azure.

Benefits

– Cost-effective: Renting cloud-based GPUs can be more cost-effective than buying and maintaining your own hardware.

– Scalability: Cloud-based GPUs can be scaled up or down based on your needs, allowing you to handle large amounts of data and complex models.

– Accessibility: Cloud-based GPUs can be accessed from anywhere with an internet connection, making it easier to collaborate with others and work remotely.
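
Whether renting beats buying comes down to how many hours you will actually use the GPU. A simple break-even estimate, assuming a one-time purchase price plus electricity for owned hardware and a flat hourly rate for the cloud; cooling, maintenance, resale value, and storage or data-transfer fees are ignored. The function name and every number in the example are hypothetical, not quoted prices.

```python
def break_even_hours(purchase_price, cloud_rate_per_hour, power_watts, electricity_per_kwh):
    """Hours of use after which owning a GPU becomes cheaper than renting one.

    Owning costs the purchase price up front plus electricity per hour;
    renting costs only the hourly cloud rate (a simplifying assumption).
    """
    own_cost_per_hour = power_watts / 1000 * electricity_per_kwh
    saving_per_hour = cloud_rate_per_hour - own_cost_per_hour
    if saving_per_hour <= 0:
        return float("inf")  # owning never catches up with renting
    return purchase_price / saving_per_hour


# Hypothetical: $2,000 card, 350 W, $0.15/kWh versus a $1.00/hour cloud instance.
print(round(break_even_hours(2000, 1.00, 350, 0.15)))
```

With these hypothetical figures, owning breaks even after roughly 2,100 hours of use; a GPU that sits idle most of the time may never reach that point.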

The cost of buying and setting up your own GPU for machine learning purposes

Hardware costs

The cost of buying a GPU for machine learning depends on the type and brand. High-end GPUs such as the NVIDIA Titan RTX can cost over $2,000 while mid-range GPUs such as the NVIDIA GeForce GTX 1660 Super can cost around $250.

Additional costs

In addition to hardware costs, there may be additional costs associated with setting up your own GPU for machine learning such as:

– Power consumption: Running a high-end GPU can increase your electricity bill significantly.

– Cooling: GPUs generate a lot of heat and require adequate cooling to prevent overheating.

– Maintenance: Over time, hardware may need to be replaced or upgraded.

Alternatives to using GPUs for machine learning, such as FPGAs or TPUs

FPGAs

FPGAs (field-programmable gate arrays) are specialized hardware that can be programmed to perform specific tasks. They offer similar advantages to GPUs in terms of parallel processing but are more flexible and customizable.

Advantages:

– Flexibility: FPGAs can be reprogrammed for different tasks, making them more versatile than fixed-function chips (ASICs).

– Energy efficiency: Because the circuit can be tailored to a specific workload, FPGAs can consume less power than CPUs or GPUs for certain tasks.

Disadvantages:

– Complexity: Programming FPGAs requires specialized knowledge and skills.

– Cost: High-end FPGA boards can be more expensive than GPUs or CPUs, and the specialized development work adds to the total cost.

TPUs

TPUs (tensor processing units) are specialized hardware developed by Google for accelerating machine learning workloads. They offer even higher performance than GPUs for certain tasks such as deep learning.

Advantages:

– Speed: TPUs can be several times faster than GPUs for certain tasks due to their optimized architecture.

– Energy efficiency: TPUs consume less power than CPUs or GPUs for certain tasks due to their specialized design.

Disadvantages:

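– Limited compatibility: TPUs are best supported by TensorFlow, a popular machine learning framework developed by Google, and by JAX. PyTorch can use them through the PyTorch/XLA library, but much of the machine learning ecosystem targets GPUs first.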

– Availability: Cloud TPUs are offered only through Google Cloud Platform (and Google services such as Colab) and may not be available in all regions.

Will future advancements in technology make GPUs obsolete for machine learning?

It’s unlikely that GPUs will become obsolete for machine learning anytime soon. While alternatives such as FPGAs and TPUs can offer higher performance or energy efficiency for specific workloads, they are often more expensive, harder to program, or less widely available than GPUs. Additionally, demand for machine learning continues to grow rapidly, so powerful, general-purpose hardware that can handle large amounts of data and complex models is likely to remain in demand. Future advancements in hardware and software could also make GPU-based machine learning more efficient and accessible.

Using cloud-based GPUs for machine learning instead of buying hardware

Cloud-based GPUs have become increasingly popular in recent years as a cost-effective and flexible alternative to purchasing and maintaining your own hardware. With cloud-based solutions, you can easily scale up or down depending on your needs, without having to worry about the upfront costs associated with buying and setting up your own GPU.

Advantages of Cloud-Based GPUs

- Flexibility: You can easily scale up or down depending on your needs without having to worry about investing in new hardware.

- Cost-Effective: You only pay for what you use, rather than investing in expensive hardware that may not be utilized fully.

- Ease of Use: Setting up a cloud-based GPU is often much easier than setting up your own hardware, especially if you are not familiar with the technical aspects of GPU installation and maintenance.

Disadvantages of Cloud-Based GPUs

- Limited Control: When using a cloud-based solution, you may have limited control over the underlying infrastructure, which could impact performance or security.

- Data Privacy Concerns: Storing sensitive data on a third-party server may raise concerns over data privacy and security.

- Cost Over Time: While cloud-based solutions may be cost-effective initially, the cost can add up over time if usage is high or if there are unexpected spikes in demand.

The cost of buying and setting up your own GPU for machine learning purposes

The cost of purchasing and setting up your own GPU for machine learning purposes can vary widely depending on several factors. These include the type of GPU you choose, its processing power, memory capacity, cooling system, and other hardware components. Additionally, you will need to consider the cost of software licenses, maintenance, and electricity consumption.

Factors Affecting Cost

- Type of GPU: Different types of GPUs have varying costs and processing power. Gaming GPUs may be less expensive but may not be optimized for machine learning workloads.

- Memory Capacity: The more memory a GPU has, the more expensive it is likely to be.

- Cooling System: High-performance GPUs generate a lot of heat, which requires an effective cooling system that can add to the overall cost.

- Software Licenses: Some machine learning software requires a license fee, which can add to the overall cost of setting up a GPU for machine learning purposes.

Alternatives to using GPUs for machine learning, such as FPGAs or TPUs

FPGAs (Field Programmable Gate Arrays) and TPUs (Tensor Processing Units) are two alternatives to using GPUs for machine learning workloads. FPGAs are programmable chips that can be customized to perform specific tasks efficiently. TPUs are specialized hardware designed by Google to accelerate machine learning, originally for TensorFlow models; they now also support JAX and PyTorch (through PyTorch/XLA).

Advantages of FPGAs and TPUs

- FPGAs offer flexibility in terms of customization and optimization for specific tasks.

- TPUs are optimized for the large matrix operations at the heart of deep learning and can outperform traditional CPUs or GPUs on many such workloads.

Disadvantages of FPGAs and TPUs

- FPGAs require specialized knowledge in programming and optimization, which can make them difficult to use without expertise in this area.

- TPUs are not widely available outside of Google’s cloud platform, which can limit their accessibility.

Will future advancements in technology make GPUs obsolete for machine learning?

While it is difficult to predict the future of technology, it is unlikely that GPUs will become obsolete for machine learning purposes anytime soon. This is because GPUs offer a high degree of flexibility and performance that other alternatives may not be able to match. Additionally, GPU manufacturers are constantly innovating and improving their products to meet the demands of the rapidly growing machine learning market.

Possible Future Advancements in GPU Technology

- Increased Memory Capacity: GPUs with larger memory capacities could allow for more complex models and larger datasets to be processed efficiently.

- Improved Energy Efficiency: Energy-efficient GPUs could reduce the overall cost of running machine learning workloads while also reducing environmental impact.

- Integration with Cloud-Based Solutions: Greater integration between GPUs and cloud-based solutions could further enhance flexibility and scalability for users.

In conclusion, machine learning algorithms can certainly benefit from the use of GPUs. With their ability to handle large amounts of data and run the highly parallel calculations that deep learning relies on, GPUs are a valuable tool for many AI developers, although smaller projects can still run well on a CPU.

Can machine learning be done without a GPU?

Yes. It is possible to learn machine learning concepts on a laptop that does not have a graphics processing unit (GPU); in that case, the central processing unit (CPU) handles all computation. A CPU is adequate for studying machine learning, but larger datasets and models require a capable GPU to process operations efficiently.
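
In practice, code can be written so that it uses a GPU when one exists and falls back to the CPU otherwise. A minimal sketch, assuming PyTorch as the framework (the article does not name one): `torch.cuda.is_available()` reports whether a CUDA GPU can be used. Other accelerators, such as Apple's `mps` backend, are not handled here, and `pick_device` is a hypothetical helper.

```python
def pick_device():
    """Return "cuda" when PyTorch can see an NVIDIA GPU, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch not installed: plain CPU code is the fallback
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print("running on:", device)
# With PyTorch installed, models and tensors are then moved with .to(device),
# e.g. model = model.to(device) and batch = batch.to(device).
```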

Does machine learning require CPU or GPU?

In summary, machine learning workflows use both CPUs and GPUs. GPUs are used to train large deep learning models, while CPUs are useful for data preparation, feature extraction, and small-scale models. Both CPUs and GPUs can be used for inference and hyperparameter tuning.

Do AI and ML require a GPU?

Is a professional graphics card necessary for machine learning and AI? No. Consumer cards such as the NVIDIA GeForce RTX 3080, 3080 Ti, and 3090 are suitable for this type of workload. For systems with three or four GPUs, however, professional cards such as the RTX A5000 and A6000, with more memory and cooling better suited to multi-GPU setups, are the better option.

Do I need a GPU for TensorFlow?

TensorFlow can run computations on several device types, including central processing units (CPUs) and graphics processing units (GPUs). A GPU is not required: if none is available, TensorFlow runs on the CPU, although training larger models will be slower.
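
You can check which GPUs TensorFlow sees with `tf.config.list_physical_devices("GPU")`; an empty list means TensorFlow will run on the CPU. The wrapper below, `visible_gpus` (a hypothetical helper), also returns an empty list when TensorFlow is not installed, so the check runs anywhere.

```python
def visible_gpus():
    """List the GPUs TensorFlow can use; an empty list means it will run on the CPU."""
    try:
        import tensorflow as tf
    except ImportError:
        return []  # TensorFlow not installed in this environment
    return tf.config.list_physical_devices("GPU")


print("GPUs visible to TensorFlow:", visible_gpus())
```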

Is a graphics card necessary for Python?

For coding in general, a dedicated graphics card is not essential. It is usually more cost-effective to rely on integrated graphics and spend the money saved on a better processor or an SSD, which offers better value for your money.

What are the disadvantages of using GPUs for machine learning?

GPUs are costly and have limited memory capacity. Moving data between main memory and GPU memory takes time, and for small workloads this overhead can cancel out the benefits of parallelization. CPUs are less parallel but more flexible; GPUs are more parallel but less flexible.
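
The data-transfer drawback can be made concrete with a back-of-the-envelope model: offloading only pays off when transfer time plus GPU compute time is less than the CPU time. The sketch assumes the input and output are the same size, copies do not overlap with computation, and a hypothetical link bandwidth; real systems can overlap copies with compute, so treat it as a rough rule. `offload_pays_off` is a hypothetical helper.

```python
def offload_pays_off(data_bytes, cpu_seconds, gpu_seconds, link_bytes_per_second):
    """Return True if running on the GPU, including copies both ways, beats the CPU.

    Assumes input and result are each `data_bytes` in size and that copies
    do not overlap with computation.
    """
    transfer_seconds = 2 * data_bytes / link_bytes_per_second  # to GPU and back
    return transfer_seconds + gpu_seconds < cpu_seconds


LINK = 16e9  # hypothetical link bandwidth: 16 GB/s
print(offload_pays_off(1e9, cpu_seconds=0.1, gpu_seconds=0.01, link_bytes_per_second=LINK))
print(offload_pays_off(1e9, cpu_seconds=10.0, gpu_seconds=0.5, link_bytes_per_second=LINK))
```

With a 16 GB/s link, moving 1 GB each way costs 0.125 s. A job that takes 0.1 s on the CPU is therefore not worth offloading even if the GPU computes it in 0.01 s, whereas a 10 s CPU job that the GPU finishes in 0.5 s clearly is.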